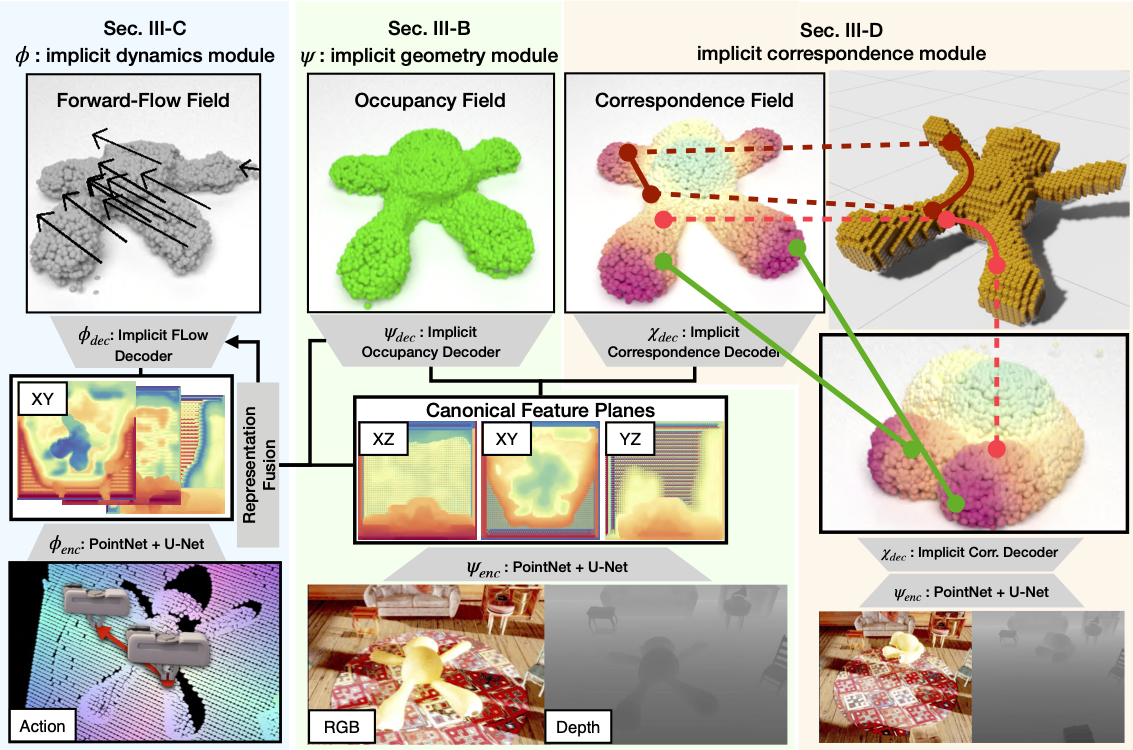

DeformNet: Free-Form Deformation Network for 3D Shape Reconstruction from a Single Image

Abstract

3D reconstruction from a single image is a key problem in applications ranging from robotic manipulation to augmented reality. Prior methods have tackled this problem with generative models that predict 3D reconstructions as voxels or point clouds. However, these methods can be computationally expensive and can miss fine shape details. We introduce a new differentiable layer for 3D data deformation and use it in DeformNet to learn free-form deformations (FFD) that can be applied to multiple 3D data formats. DeformNet takes an image as input, retrieves the nearest shape template from a database, and deforms the template to match the query image. We evaluate our approach on the ShapeNet database and show that: (a) free-form deformation is a powerful new building block for deep learning models that manipulate 3D data; (b) DeformNet combines this FFD layer with shape retrieval to produce smooth, detail-preserving point-cloud reconstructions that are qualitatively plausible for a single query image; and (c) compared with other state-of-the-art 3D reconstruction methods, DeformNet quantitatively matches or outperforms their benchmark results by significant margins.
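To make the deformation step concrete, here is a minimal NumPy sketch of classical free-form deformation with a trivariate Bernstein basis. It is an illustration under stated assumptions, not DeformNet's actual layer: the function names `bernstein_basis` and `ffd`, the unit-cube normalization, and the 4x4x4 control lattice are all hypothetical choices made for this example. In a network, the control-point offsets would presumably be predicted from the image; here they are filled in by hand.

```python
import numpy as np
from math import comb


def bernstein_basis(t, degree):
    """Evaluate all Bernstein polynomials of the given degree at t.

    t is a 1-D array of values in [0, 1]; the result has shape
    (len(t), degree + 1).
    """
    t = np.asarray(t, dtype=float)[:, None]
    i = np.arange(degree + 1)[None, :]
    coeffs = np.array([comb(degree, k) for k in range(degree + 1)], dtype=float)
    return coeffs[None, :] * t**i * (1.0 - t) ** (degree - i)


def ffd(points, control_offsets):
    """Deform points with a lattice of control-point offsets.

    points: (N, 3) array, assumed to be normalized to the unit cube.
    control_offsets: (l+1, m+1, n+1, 3) array of displacements applied
        to the control points of a regular lattice over the unit cube.
    Returns the (N, 3) deformed points.
    """
    l, m, n = (s - 1 for s in control_offsets.shape[:3])
    bu = bernstein_basis(points[:, 0], l)
    bv = bernstein_basis(points[:, 1], m)
    bw = bernstein_basis(points[:, 2], n)
    # weights[p, i, j, k] = B_i(u_p) * B_j(v_p) * B_k(w_p)
    weights = np.einsum("pi,pj,pk->pijk", bu, bv, bw)
    # Each point moves by a weighted sum of the control-point offsets.
    displacement = np.einsum("pijk,ijkc->pc", weights, control_offsets)
    return points + displacement


# Hypothetical usage: a random "template" point cloud and a 4x4x4 lattice.
rng = np.random.default_rng(0)
template = rng.random((1024, 3))
offsets = np.zeros((4, 4, 4, 3))
offsets[3, :, :, 0] = 0.2  # push the x = 1 face of the lattice outward
deformed = ffd(template, offsets)
```

Because the displacement is a fixed linear combination of the offsets, with weights that depend only on the template points, the operation is differentiable with respect to the offsets. That property is what allows a deformation of this kind to be used as a layer and trained by backpropagation. The same function applies to any data given as 3D coordinates, for example point clouds or mesh vertices.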
