Weakly Supervised 3D Reconstruction with Manifold Constraint

Abstract

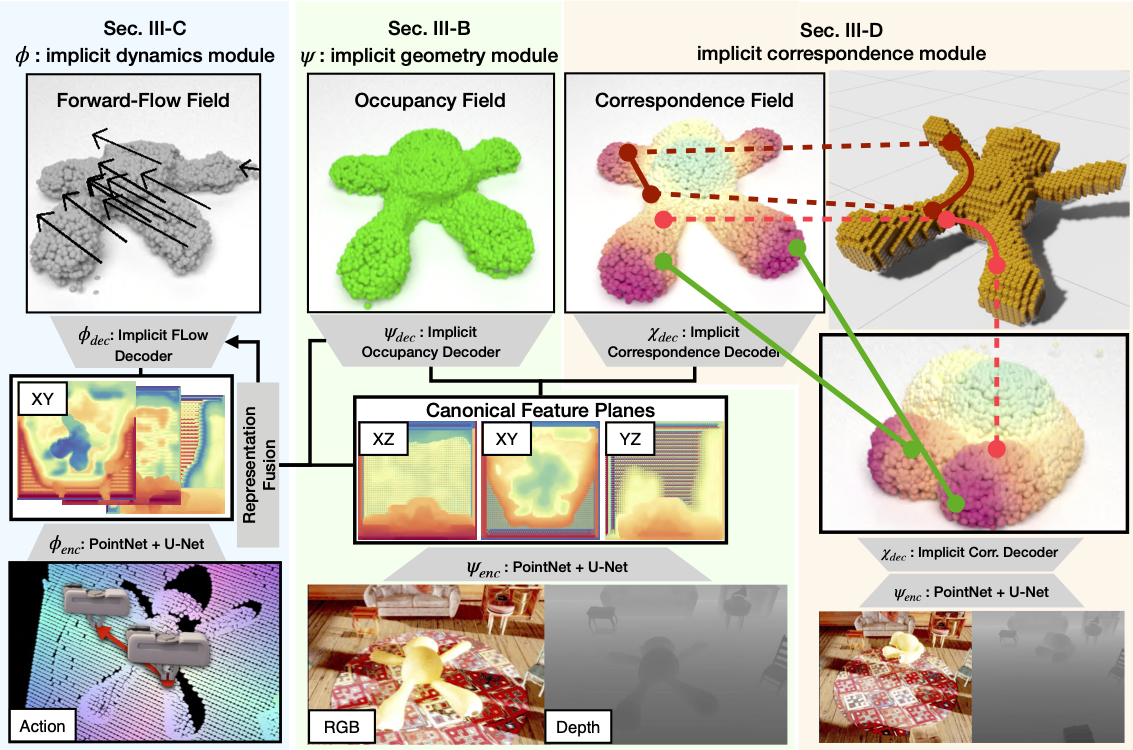

Volumetric 3D reconstruction has seen significant progress through deep neural network based methods that address some of the limitations of traditional reconstruction algorithms. However, this gain in performance requires large-scale annotations of 2D/3D data. This paper introduces a novel generative model for volumetric 3D reconstruction, the Weakly supervised Generative Adversarial Network (WS-GAN), which reduces reliance on expensive 3D supervision. During training, WS-GAN takes as input an image, a sparse set of 2D object masks with their respective camera parameters, and an unmatched 3D model. WS-GAN uses a learned encoding as input to a conditional 3D-model generator trained alongside a discriminator, which is constrained to the manifold of realistic 3D shapes. We bridge the representation gap between 2D masks and 3D volumes through a perspective raytrace pooling layer that enables perspective projection and allows backpropagation. We evaluate WS-GAN on the ShapeNet, ObjectNet and Stanford Online Product datasets for single-view and multi-view reconstruction on both synthetic and real images. We compare our method to voxel carving and to prior work with full 3D supervision. Additionally, we demonstrate that the learned feature representation is semantically meaningful through interpolation and manipulation in input space.
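To make the idea of pooling a 3D volume into a 2D mask along perspective rays more concrete, here is a minimal pure-Python sketch. It is not the paper's layer: the function name `raytrace_max_pool`, the pinhole camera looking along +z, the unit cube centred at the origin, and nearest-voxel sampling are all assumptions made for illustration. Each pixel takes the maximum occupancy met along its ray; in an automatic-differentiation framework, a max of this kind passes the gradient to the voxel that produced it, which is what would let a 2D mask loss reach the 3D volume.

```python
import math

def raytrace_max_pool(voxels, origin, focal, height, width,
                      n_samples=64, far=4.0):
    """Render a 2D mask from a cubic occupancy grid by max-pooling along rays.

    voxels: nested list [D][D][D] of occupancies in [0, 1], indexed [x][y][z],
            covering the cube [-0.5, 0.5]^3 (assumed layout for this sketch).
    origin: camera centre (x, y, z); the camera looks along +z (assumption).
    focal:  focal length in pixels of the hypothetical pinhole camera.
    """
    d = len(voxels)
    mask = [[0.0] * width for _ in range(height)]
    for v in range(height):
        for u in range(width):
            # Pinhole model: unit ray direction through the pixel centre.
            dx = (u + 0.5 - width / 2) / focal
            dy = (v + 0.5 - height / 2) / focal
            norm = math.sqrt(dx * dx + dy * dy + 1.0)
            direction = (dx / norm, dy / norm, 1.0 / norm)
            best = 0.0
            for s in range(n_samples):
                # Sample point at depth t along the ray.
                t = far * (s + 0.5) / n_samples
                p = [origin[k] + t * direction[k] for k in range(3)]
                # Nearest-voxel lookup; samples outside the cube are skipped.
                idx = [math.floor((c + 0.5) * d) for c in p]
                if all(0 <= i < d for i in idx):
                    best = max(best, voxels[idx[0]][idx[1]][idx[2]])
            mask[v][u] = best
    return mask

# Toy example: an 8x8x8 grid with a 2x2x2 occupied block at its centre,
# viewed from a camera placed two units in front of the cube.
D = 8
grid = [[[1.0 if all(i in (3, 4) for i in (x, y, z)) else 0.0
          for z in range(D)] for y in range(D)] for x in range(D)]
mask = raytrace_max_pool(grid, origin=(0.0, 0.0, -2.0), focal=16.0,
                         height=8, width=8)
```

Because the rays diverge from the camera centre, the projected silhouette depends on the camera parameters, which is why each mask has to be paired with its viewpoint. A practical layer would be written as tensor operations in a deep learning framework instead of Python loops.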
